In the previous post, we saw how to create retention policies and view the run history of those policies. In this post, let us see how to view the retained data and what the limitations of the data retention feature are.

View Retained Data

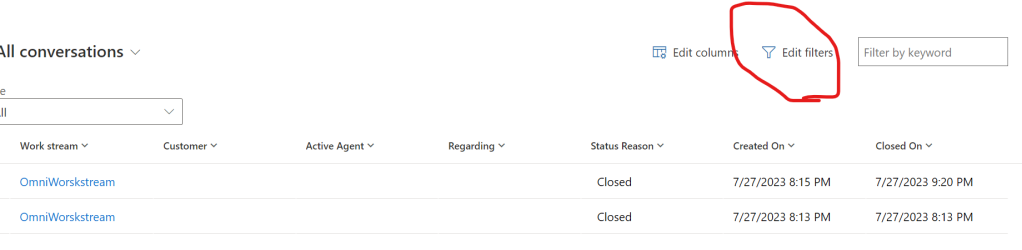

Now let us see how to access these retained records in Dynamics. Go to any view and click Edit filters.

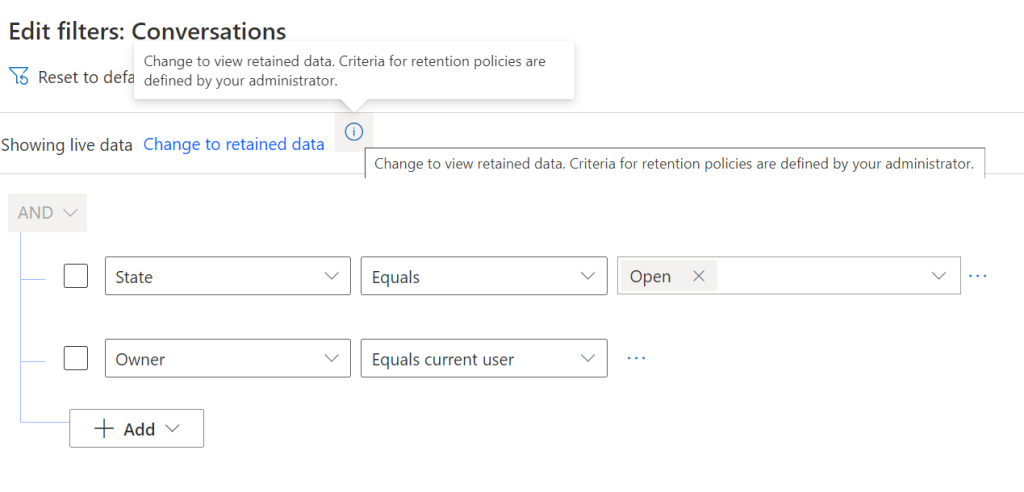

In the Edit filters pane there is an option, “Change to retained data”. Selecting it switches the view to show retained data.

When we select it, a confirmation prompt appears; click Change to retained data to continue.

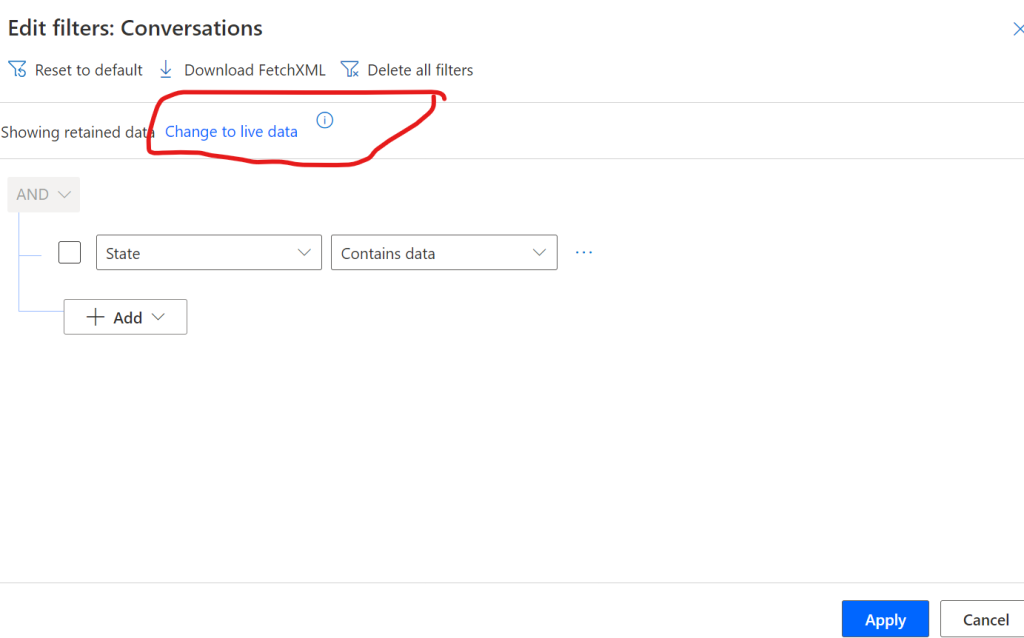

The option then automatically flips to “Change to Live data”, which we can use to switch back to live data.

Notice the “*” in front of the view name, which indicates that the view is showing retained data.

Note: Once the data is successfully moved to the data lake, it is purged from the Dataverse database. It cannot be moved back to the database.
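Retained data can also be read outside the UI. My understanding from Microsoft's documentation is that a FetchXML query targets retained data when the `fetch` element carries `datasource="retained"`, and that such a query can be sent through the Dataverse Web API's `fetchXml` query parameter. The sketch below only builds the query and request URL under those assumptions; the organization URL, the `v9.2` endpoint version and the helper names are illustrative, and because the feature is in preview it is worth checking the current docs before relying on it.

```python
import xml.etree.ElementTree as ET
from urllib.parse import quote


def build_retained_fetchxml(entity_name: str, attributes: list[str]) -> str:
    """Hypothetical helper: build a single-table FetchXML query for retained data."""
    # Assumption: datasource="retained" tells Dataverse to read from retained
    # (data lake) storage instead of live data.
    fetch = ET.Element("fetch", {"datasource": "retained"})
    entity = ET.SubElement(fetch, "entity", {"name": entity_name})
    for attr in attributes:
        ET.SubElement(entity, "attribute", {"name": attr})
    return ET.tostring(fetch, encoding="unicode")


def build_retained_query_url(org_url: str, entity_set: str, fetchxml: str) -> str:
    """Hypothetical helper: URL-encode the FetchXML into a Web API GET request URL."""
    # Assumption: the v9.2 Web API endpoint; use the version your org exposes.
    base = org_url.rstrip("/")
    return f"{base}/api/data/v9.2/{entity_set}?fetchXml={quote(fetchxml)}"


if __name__ == "__main__":
    xml = build_retained_fetchxml("account", ["name", "accountid"])
    # contoso.crm.dynamics.com is a placeholder organization URL.
    print(build_retained_query_url("https://contoso.crm.dynamics.com", "accounts", xml))
```

Each request built this way would still count as one query against the retained-data limits described below.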

Limitations for retrieval of retained data

These restrictions are enforced by Dataverse for each environment:

- Up to five users can query and retrieve retained data at the same time.

- Up to 30 queries per day are allowed for each environment.

- Any single request from advanced find, a Power Automate cloud flow, or the Dataverse OData public API counts as one query.

- Queries are allowed on one table at a time. Joins and aggregation functions aren’t allowed.

- Retained data includes lookup data. Lookup values in the table are denormalized into ID and name values.
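Because every request counts against a small daily budget, it can help to reject unsupported queries before they are sent. The following is a hypothetical client-side guard (not a Dataverse API) that checks a FetchXML string for a single `entity`, no `link-entity` joins and no aggregation, and keeps a local count against the 30-queries-per-day limit. It cannot see queries made by other users or tools, so it only approximates the real budget, and the five-concurrent-users limit is left to Dataverse itself.

```python
import xml.etree.ElementTree as ET
from datetime import date
from typing import Optional

MAX_QUERIES_PER_DAY = 30  # per environment, per the documented limit


class RetainedQueryGuard:
    """Hypothetical local pre-check; Dataverse still enforces the real limits."""

    def __init__(self, max_per_day: int = MAX_QUERIES_PER_DAY):
        self.max_per_day = max_per_day
        self._day = date.today()
        self._count = 0

    @staticmethod
    def validate(fetchxml: str) -> None:
        """Raise ValueError if the query uses features not allowed on retained data."""
        root = ET.fromstring(fetchxml)
        # Aggregation can be requested on <fetch> or on individual attributes.
        if root.get("aggregate", "").lower() == "true" or root.find(".//attribute[@aggregate]") is not None:
            raise ValueError("aggregation is not allowed on retained data")
        # Only one table per query.
        if len(root.findall("entity")) != 1:
            raise ValueError("query exactly one table at a time")
        # Joins are expressed as <link-entity> in FetchXML.
        if root.find(".//link-entity") is not None:
            raise ValueError("joins (link-entity) are not allowed on retained data")

    def reserve(self, fetchxml: str, today: Optional[date] = None) -> int:
        """Validate, then count the query against today's budget; return queries left."""
        self.validate(fetchxml)
        today = today or date.today()
        if today != self._day:  # new day: reset the local counter
            self._day, self._count = today, 0
        if self._count >= self.max_per_day:
            raise RuntimeError("daily retained-data query budget exhausted")
        self._count += 1
        return self.max_per_day - self._count
```

Validation runs before the counter is incremented, so a rejected query does not use up any of the local budget.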

Once the retention policies are established, we need to review their impact on the capacity reports. Let us see how this shows up in the capacity report.

Capacity Reports

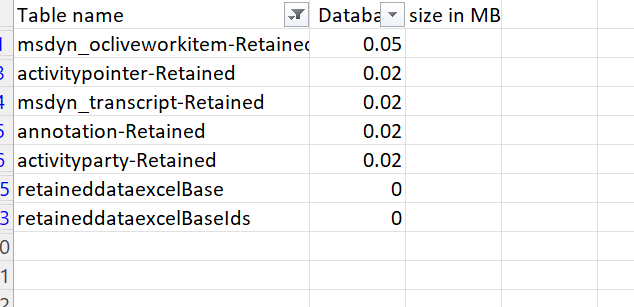

When we look at the capacity report after establishing the retention policy, we can see that, at the moment, retained data is also included in the capacity reports; however, the retained tables are listed separately. If these tables are not among the top storage consumers, we can download the list of tables, where we can find the tables with the -Retained suffix and their consumption.

We can filter the table names by the -Retained suffix.

This shows the capacity used by each retained table.
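The same filter can be scripted against the downloaded table list. This is a minimal sketch that assumes the export is a CSV with a `Table name` column and a `Size (MB)` column; those column names and the sample rows are hypothetical, so adjust them to match the actual file.

```python
import csv
import io

RETAINED_SUFFIX = "-Retained"


def retained_usage(csv_text: str, name_col: str = "Table name",
                   size_col: str = "Size (MB)") -> dict[str, float]:
    """Return {table: size} for -Retained tables, largest first.

    Assumption: column names are placeholders for whatever the real export uses.
    """
    usage: dict[str, float] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        name = row[name_col].strip()
        if name.endswith(RETAINED_SUFFIX):  # keep only retained tables
            usage[name] = float(row[size_col])
    # Sort by consumption so the biggest retained tables come first.
    return dict(sorted(usage.items(), key=lambda kv: kv[1], reverse=True))


if __name__ == "__main__":
    # Hypothetical sample rows, not real capacity data.
    sample = (
        "Table name,Size (MB)\n"
        "account,1200\n"
        "account-Retained,350.5\n"
        "msdyn_ocliveworkitem-Retained,900\n"
    )
    for table, size in retained_usage(sample).items():
        print(f"{table}: {size} MB")
```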

Note: At the moment, Microsoft has not published a breakdown of how database storage will be charged versus data lake storage, and the feature is still in preview, so this million-dollar question remains unanswered. Also, each query that fetches retained records counts against the API requests available in our plan. One good thing is that these retention policies are solution-aware, so unlike bulk deletion jobs, we do not have to recreate them separately in every environment.

Apart from looking for an archival solution, we also had to investigate the reasons for such an exponential increase in data size after the PVA + Omnichannel implementation. I will share a few findings in my next blog post.